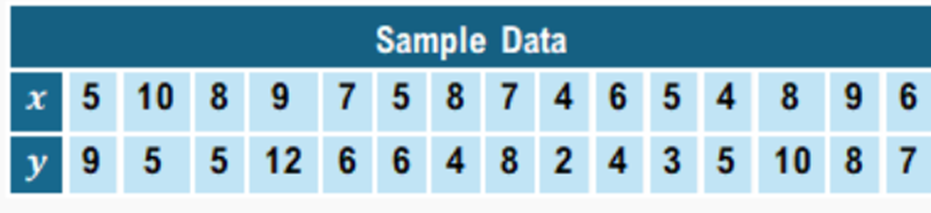

Using the sample data below, create a confidence interval for \( \beta \) to see if there is evidence of a positive correlation between \( x \) and \( y \) at the stated significance level.


Inferences for Slope

Multiple Choice

Using the sample data below, run a hypothesis test on \( \beta \) to see if there is evidence of a positive correlation between \( x \) and \( y \) with \( \alpha = 0.01 \).

A

Reject \( H_0 \) and conclude that there is a positive correlation between \( x \) and \( y \) and that \( \beta > 0 \).

B

Fail to reject \( H_0 \) since there is enough evidence to suggest \( \beta > 0 \), but not enough evidence to suggest positive linear correlation between \( x \) and \( y \).

C

Fail to reject \( H_0 \) since there is not enough evidence to suggest \( \beta > 0 \) and not enough evidence to suggest positive linear correlation between \( x \) and \( y \).

D

Reject \( H_0 \) since there is not enough evidence to suggest \( \beta > 0 \) and not enough evidence to suggest positive linear correlation between \( x \) and \( y \).

Verified step by step guidance


Step 1: State the hypotheses for the test. The null hypothesis is that the slope \( \beta = 0 \) (no linear relationship), and the alternative hypothesis is that \( \beta > 0 \) (positive linear relationship). Formally, \( H_0: \beta = 0 \) and \( H_a: \beta > 0 \).

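Step 2: Calculate the sample statistics needed for the test: the means of \( x \) and \( y \), the sum of squares \( S_{xx} \), and the sum of cross-products \( S_{xy} \). (Dividing \( S_{xx} \) and \( S_{xy} \) by \( n-1 \) gives the sample variance of \( x \) and the sample covariance.) Use the formulas: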

\[\bar{x} = \frac{1}{n} \sum_{i=1}^n x_i, \quad \bar{y} = \frac{1}{n} \sum_{i=1}^n y_i\]

\[S_{xx} = \sum (x_i - \bar{x})^2, \quad S_{xy} = \sum (x_i - \bar{x})(y_i - \bar{y})\]
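A minimal Python sketch of Step 2, assuming the data are stored as two plain lists of equal length. The values in `x` and `y` are made up for illustration (the problem's own sample data is not reproduced here), and the function name `summary_stats` is hypothetical.

```python
# Hypothetical sample data for illustration only; NOT the problem's data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

def summary_stats(x, y):
    """Return (x_bar, y_bar, S_xx, S_xy) for paired samples x and y."""
    # The slope t-test later uses n - 2 degrees of freedom, so require n >= 3.
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need paired samples with n >= 3")
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # S_xx: sum of squared deviations of x from its mean
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    # S_xy: sum of products of paired deviations from the means
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return x_bar, y_bar, s_xx, s_xy

x_bar, y_bar, s_xx, s_xy = summary_stats(x, y)
print(x_bar, y_bar, s_xx, s_xy)  # 3.0 4.0 10.0 6.0
```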

Step 3: Calculate the estimated slope \( b_1 \) of the regression line using the formula:

\[b_1 = \frac{S_{xy}}{S_{xx}}\]

This \( b_1 \) is the point estimate for \( \beta \).
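Under the same assumptions (made-up `x` and `y` lists), the slope estimate is a single division. The sketch also computes the least-squares intercept \( b_0 = \bar{y} - b_1\bar{x} \), which the fitted values in Step 4 need; the variable names are illustrative.

```python
# Hypothetical data for illustration only; NOT the problem's data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n
s_xx = sum((xi - x_bar) ** 2 for xi in x)
s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

b1 = s_xy / s_xx          # point estimate of the population slope beta
b0 = y_bar - b1 * x_bar   # least-squares intercept (line passes through the means)
print(round(b1, 4), round(b0, 4))  # 0.6 2.2
```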

Step 4: Compute the standard error of the slope estimate \( SE_{b_1} \). First, calculate the residual sum of squares and then use:

\[SE_{b_1} = \sqrt{\frac{SSE}{(n-2) S_{xx}}}\]

where \( SSE = \sum (y_i - \hat{y}_i)^2 \), \( \hat{y}_i = b_0 + b_1 x_i \), and \( b_0 = \bar{y} - b_1 \bar{x} \) is the estimated intercept.
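A plain-Python sketch of Step 4, again on made-up data. The names `y_hat`, `sse`, and `se_b1` are illustrative choices, not part of the original solution; the last line applies \( SE_{b_1} = \sqrt{SSE / ((n-2) S_{xx})} \) directly.

```python
import math

# Hypothetical data for illustration only; NOT the problem's data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]

n = len(x)
x_bar, y_bar = sum(x) / n, sum(y) / n
s_xx = sum((xi - x_bar) ** 2 for xi in x)
s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
b1 = s_xy / s_xx
b0 = y_bar - b1 * x_bar

# Fitted values and residual (error) sum of squares
y_hat = [b0 + b1 * xi for xi in x]
sse = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))

# Standard error of the slope with n - 2 degrees of freedom
se_b1 = math.sqrt(sse / ((n - 2) * s_xx))
print(round(sse, 4), round(se_b1, 4))  # 2.4 0.2828
```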

Step 5: Calculate the test statistic \( t \) for the slope:

\[t = \frac{b_1 - 0}{SE_{b_1}}\]

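Compare this \( t \)-value to the upper-tail critical value \( t_{\alpha,\,n-2} \) from the \( t \)-distribution with \( n-2 \) degrees of freedom at significance level \( \alpha = 0.01 \). If \( t \) is greater than the critical value, reject \( H_0 \); otherwise, fail to reject \( H_0 \). Because this statistic also equals \( r\sqrt{n-2}/\sqrt{1-r^2} \), where \( r \) is the sample correlation coefficient, rejecting \( H_0 \) in favor of \( \beta > 0 \) is equivalent to concluding that there is a positive linear correlation between \( x \) and \( y \).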
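An end-to-end sketch of Step 5 using only the Python standard library. Because the standard library has no \( t \)-distribution, the hypothetical helpers `t_pdf`, `t_upper_tail`, and `t_critical` approximate the tail probability with Simpson's rule and find the critical value by bisection; in practice a statistics library or a \( t \)-table would supply \( t_{\alpha,\,n-2} \). The data are made up, so the decision printed here says nothing about the answer to the actual problem.

```python
import math

# Hypothetical data for illustration only; NOT the problem's data.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
alpha = 0.01

def t_pdf(t, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + t * t / df) ** (-(df + 1) / 2)

def t_upper_tail(t, df, steps=2000):
    """P(T > t) for t >= 0: 0.5 minus a Simpson's-rule integral of the pdf on [0, t]."""
    h = t / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - total * h / 3

def t_critical(alpha, df):
    """Upper-tail critical value c with P(T > c) = alpha (alpha < 0.5), by bisection."""
    lo, hi = 0.0, 1.0
    while t_upper_tail(hi, df) > alpha:  # widen until the root is bracketed
        hi *= 2
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_upper_tail(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

n = len(x)
df = n - 2
x_bar, y_bar = sum(x) / n, sum(y) / n
s_xx = sum((xi - x_bar) ** 2 for xi in x)
s_yy = sum((yi - y_bar) ** 2 for yi in y)
s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
b1 = s_xy / s_xx
b0 = y_bar - b1 * x_bar
sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
se_b1 = math.sqrt(sse / (df * s_xx))

# Test statistic for H0: beta = 0 against Ha: beta > 0
t_stat = (b1 - 0) / se_b1
t_crit = t_critical(alpha, df)
# One-sided p-value; t_upper_tail expects t >= 0, so use symmetry for negative t
p_value = t_upper_tail(t_stat, df) if t_stat >= 0 else 1 - t_upper_tail(-t_stat, df)

# The same statistic computed from the sample correlation r
r = s_xy / math.sqrt(s_xx * s_yy)
t_from_r = r * math.sqrt(df) / math.sqrt(1 - r * r)

decision = "reject H0" if t_stat > t_crit else "fail to reject H0"
print(f"t = {t_stat:.4f}, t_crit = {t_crit:.4f}, p = {p_value:.4f}: {decision}")
```

For these made-up numbers, \( t \approx 2.12 \) with 3 degrees of freedom falls well below the critical value of about 4.54, so the sketch fails to reject \( H_0 \); `t_from_r` reproduces the same statistic from \( r \), matching the equivalence between the slope test and the correlation test.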
